케라스 강좌 내용

https://tykimos.github.io/Keras/lecture/

https://www.facebook.com/groups/TensorFlowKR/permalink/498759757131754/

https://leonardoaraujosantos.gitbooks.io/artificial-inteligence/content/

https://www.gitbook.com/book/leonardoaraujosantos/artificial-inteligence/details

안녕하세요.

오늘 공유드릴 것은 머신러닝/딥러닝 전반의 내용을 담고 있는 ebook입니다.

선형대수부터 해서, classification 계열의 정복자들 (residual net 등), detection 계열의 정복자들 (Yolo 등), 강화학습 뭐 거의 다 다루고 있다고 해도 무방하네요.

https://leonardoaraujosantos.gitbooks.io/artificial-inte…/…/

pdf로 다운 받으셔서 보실 수도 있습니다.

https://www.gitbook.com/…/le…/artificial-inteligence/details

대략 살펴보니 입문자에게도 부담없을 정도로 자세하게 설명이 되어 있는 것 같습니다.

한 사람이 정리한 듯 한데, 만약 맞다면 대단한 정리네요;;;

https://www.facebook.com/nextobe1/posts/341026106333391

LSTM을 이용한 감정 분석 w/ Tensorflow

O'Reilly의 서버에서 미리 빌드된 환경에서 이 코드를 셀 단위로 실행하거나 자신의 컴퓨터에서 이 파일을 실행할 수 있습니다. 또한 사전 에 Pre-Trained LSTM.ipynb로 자신 만의 텍스트를 입력하고 훈련된 네트워크의 출력을 볼 수 있습니다.

| deploy a CNN that could understand 10 signs or hand gestures. (0) | 2018.02.22 |

|---|---|

| Quick complete Tensorflow tutorial to understand and run Alexnet, VGG, Inceptionv3, Resnet and squeezeNet networks (0) | 2017.07.26 |

| Python TensorFlow Tutorial – Build a Neural Network (0) | 2017.04.11 |

| Time Series Forecasting with Python 7-Day Mini-Course (0) | 2017.03.24 |

| TensorFlow: training on my own image (0) | 2017.03.21 |

https://kr.mathworks.com/help/images/ref/imhistmatch.html

imhistmatch

Adjust histogram of 2-D image to match histogram of reference image

B = imhistmatch(A,ref)B = imhistmatch(A,ref,nbins)[B,hgram] = imhistmatch(___)B = imhistmatch(A,ref)A returning output image B whose histogram approximately matches the histogram of the reference image ref.

If both A and ref are truecolor RGB images, imhistmatch matches each color channel of A independently to the corresponding color channel of ref.

If A is a truecolor RGB image and ref is a grayscale image, imhistmatch matches each channel of A against the single histogram derived from ref.

If A is a grayscale image, ref must also be a grayscale image.

Images A and ref can be any of the permissible data types and need not be equal in size.

B = imhistmatch(A,ref,nbins)nbins equally spaced bins within the appropriate range for the given image data type. The returned image B has no more than nbins discrete levels.

If the data type of the image is either single or double, the histogram range is [0, 1].

If the data type of the image is uint8, the histogram range is [0, 255].

If the data type of the image is uint16, the histogram range is [0, 65535].

If the data type of the image is int16, the histogram range is [-32768, 32767].

[ returns the histogram of the reference image B,hgram] = imhistmatch(___)ref used for matching in hgram. hgram is a 1-by-nbins (when ref is grayscale) or a 3-by-nbins (when ref is truecolor) matrix, where nbins is the number of histogram bins. Each row in hgram stores the histogram of a single color channel of ref.

The objective of imhistmatch is to transform image A such that the histogram of image B matches the histogram derived from image ref. It consists of nbins equally spaced bins which span the full range of the image data type. A consequence of matching histograms in this way is that nbins also represents the upper limit of the number of discrete data levels present in image B.

An important behavioral aspect of this algorithm to note is that as nbins increases in value, the degree of rapid fluctuations between adjacent populated peaks in the histogram of image B tends to increase. This can be seen in the following histogram plots taken from the 16–bit grayscale MRI example.

An optimal value for nbins represents a trade-off between more output levels (larger values of nbins) while minimizing peak fluctuations in the histogram (smaller values of nbins).

histeq | imadjust | imhist | imhistmatchn

| matlab code - convert face landmarks matlab .mat to .pts file (0) | 2018.06.07 |

|---|---|

| matlab audio read write (0) | 2017.12.20 |

| matlab figure 파일을 300dpi로 저장하는 법 - How can I save a figure to a TIFF image with resolution 300 dpi in MATLAB? (0) | 2017.03.27 |

| matlab TreeBagger 예제 나와있는 페이지 (0) | 2017.03.11 |

| MATLAB Treebagger and Random Forests (0) | 2017.03.11 |

| 머신러닝 용어 https://developers.google.com/machine-learning/glossary/ (0) | 2017.09.28 |

|---|---|

| 30개의 필수 데이터과학, 머신러닝, 딥러닝 치트시트 (0) | 2017.09.26 |

| Beginning Machine Learning – A few Resources [Subjective] (0) | 2017.06.20 |

| SNU TF 스터디 모임 1기 때부터 쭉 모아온 발표자료들 (0) | 2017.04.14 |

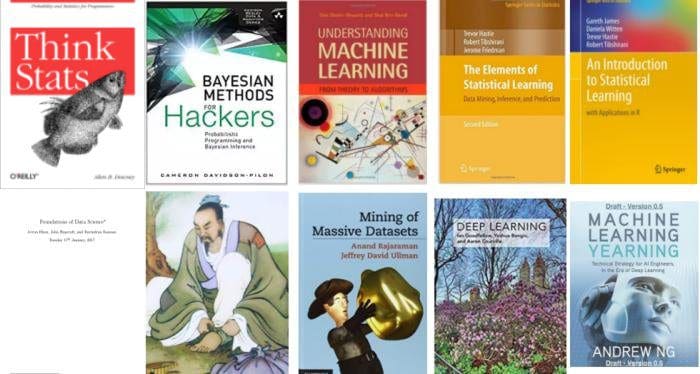

| List of Free Must-Read Books for Machine Learning (0) | 2017.04.06 |

http://cristivlad.com/beginning-machine-learning-a-few-resources-subjective/

I’ve been meaning to write this post for a while now, because many people following the scikit-learn video tutorials and the ML group are asking for direction, as in resources for those who are just starting out.

So, I decided to put up a short and subjective list with some of the resources I’d recommend for this purpose. I’ve used some of these resources when I started out with ML. Practically, there are unlimited free resources online. You just have to search, pick something, and start putting in the work, which is probably one of the most important aspects of learning and developing any skill.

Since most of these resources involve knowledge of programming (especially Python), I am assuming you have decent skills. If you don’t, I’d suggest learning to program first. I’ll write a post about that in the future, but until then, you could start, hands-on, with the free Sololearn platform.

The following resources include, but are not limited to books, courses, lectures, posts, and Jupyter notebooks, just to name a few.

In my opinion, skill development with more than one type of resource can be fruitful. Spreading yourself too thin by trying to learn from too many places at once could be detrimental though. To illustrate, here’s what I think of a potentially good approach to study ML in any given day (and I’d try to do skill development 6-7 days a week, for several hours each day):

– 1-2 hours reading from a programming book and coding along

– 1 hour watching a lecture, or a talk, or reading a research paper

– 30 minutes to 1 hour working through a course

– optional: reading 1-2 posts.

This would be a very intensive approach and it may lead to good results. These results are dependent of good sleep – in terms of quality (at night, at the right hours) and quantity (7-8 hours consistently).

Now, the short-list…

1.1. Machine Learning with Python – From CognitiveClass.ai

I put this on the top of the list because it not only goes through the basics of ML such as supervised vs. unsupervised learning, types of algorithms and popular models, but it also provides LABs, which are Jupyter notebooks where you practice what you learned during the video lectures. If you pass all weekly quizes and the final exam, you’ll obtain a free course certificate. I took this course a while ago.

1.2. Intro to Machine Learning – From Udacity

Taught by Sebastian Thrun and Katie Malone, this is a ~10-week, self-paced, very interactive course. The videos are very short, but engaging; there are more than 400 videos that you have to go through. I specifically like this one because it is very hands-on and engaging, so it requires your active input. I enjoyed going through the Enron dataset.

1.3. Machine Learning – From Udacity

Taught by Michael Littman, Charles Isbell, and Pushkar Kolhe, this is a ~4-month, self-paced course, offered as CS7641 at Georgia Tech and it’s part of their Online Masters Degree.

1.4. Principles of Machine Learning – From EDX, part of a Microsoft Program

Taught by Dr. Steve Elston and Cynthia Rudin. It’s a 6-week, intermediate level course.

1.5. Machine Learning Crash Course – from Berkeley

A 3-part series going through some of the most important concepts of ML. The accompanying graphics are ‘stellar’ and aid the learning process tremendously.

Of course, there are many more ML courses on these online learning platforms and on other platforms as well (do a search with your preferred search engine).

If you’re ML savvy, you may be wondering why I am not mentioning Ng’s course. It’s not that I don’t recommend it; on the contrary, I do. But I’d suggest going through it only after you have a solid knowledge of the basics.

Additionally, here are the materials from Stanford and MIT‘s two courses on machine learning. Some video lectures can be found in their Youtube playlists. Other big universities provide their courses on the open on Youtube or via other video sharing platforms. Find one or two and go through them diligently.

2.1. Python for Data Analysis – Wes Mckiney

– to lay the foundation of working with ML related tools and libraries

2.2. Python Machine Learning – Sebastian Raschka

– reference book.

2.3. Introduction to Machine Learning with Python – Andreas Muller and Sarah Guido

– I’ve been using this book as inspiration material in my ML Youtube video series.

Going through these books hands-on (coding along) is critical. Each of them have their github repository of Jupyter notebooks, which makes it even easier to get your hands on the code.

Strong ML skills imply solid knowledge of the mathematics, statistics and probability theory behind the algorithms, atop of the programming skills. Once you get the conceptualized knowledge of ML, you should be studying the complexities of it.

Here’s a list of free books and resources to help you along. It is relevant to ML and data mining, deep learning, natural language processing, neural networks, linear algebra (!!!), and probability and statistics (!!!).

3.1. Luis Serrano – A friendly Introduction to Machine Learning

– one of the most well explained video tutorials that I went through. No wonder Luis teaches with Udacity. His other videos on neural networks bring the same level of quality!

3.2. Roshan – Machine Learning – Video Series

– from setting up the environment to hands-on. Notebooks are also available.

3.3. Machine Learning with Scikit-Learn (Scipy 2016) – Part 1 and Part 2

– taught, hands-on, by Muller and Raschka. Notebooks are available in the description of the videos. Similar videos by these authors are available in the ‘recommended’ section (on the right of the video).

At this point I realized I’ve been using the word ‘hands-on’ way too much. But that’s okay. I guess you get the point.

3.4. Machine Learning with Python – Sentdex Playlist

– Sentdex needs no introduction. His current ML playlist consists of 72 videos.

3.5. Machine Learning with Scikit-Learn – Cristi Vlad Playlist

This is my own playlist. It currently has 27 videos and I’m posting new ones every few days. I’m working with scikit-learn on the cancer dataset and I explore different ML algorithms and models.

3.6. Machine Learning APIs by Example – Google Developers

– presented at the 2017 Google I/O Conference.

3.7. Practical Introduction to Machine Learning – Same Hames

– tutorial from 2016 PyCon Australia.

3.8. Machine Learning Recipes – with Josh Gordon

– from Google Developers.

To find similar channels you can search for anything related to ‘pycon’, ‘pydata’, ‘enthought python’, etc. I also remind you that many top universities and companies posts their courses, lectures, and talks on their video channels. Look them up.

4.1. Machine Learning 101 – from BigML

“In this page you will find a set of useful articles, videos and blog posts from independent experts around the world that will gently introduce you to the basic concepts and techniques of Machine Learning.”

4.2. Learning Machine Learning – EliteDataScience

4.3. Top-down learning path: Machine Learning for Software Engineers

– a collection of resources from a self-taught software engineer, Nam Vu, who purposed to study roughly 4 hours a night.

4.4. Machine Learning Mastery – by Dr. Jason Brownlee

To reiterate, there is an unlimited number of free and paid resources that you can learn from. To try to include too many is futile and could be counterproductive. Here I only presented a few personal picks and I suggested ways to search for others if these do not appeal to you.

Remember, to be successful in skill development, I’d recommend an eclectic approach by learning and practicing from a combination of different types of resources at the same time (just a few) for a couple of hours everyday.

Learning from courses, hands-on lectures, talks, and presentations, books (hands-on) and Jupyter notebooks is a very demanding and intensive approach that could lead to good results if you are consistent. Good sleep is crucial for skill development. Enjoy the ride!

Image: here.

| 30개의 필수 데이터과학, 머신러닝, 딥러닝 치트시트 (0) | 2017.09.26 |

|---|---|

| 데이터과학 및 딥러닝을 위한 데이터세트 (0) | 2017.07.04 |

| SNU TF 스터디 모임 1기 때부터 쭉 모아온 발표자료들 (0) | 2017.04.14 |

| List of Free Must-Read Books for Machine Learning (0) | 2017.04.06 |

| Regularization resources in matlab (0) | 2017.04.05 |

| Machine Learning · Artificial Inteligence 웹북. 딥러닝 기초 도움 (0) | 2017.10.31 |

|---|---|

| Deep Learning Lecture Collection (Spring 2017): http://www.youtube.com/playlist?list=PL3FW7Lu3i5JvHM8ljYj-zLfQRF3EO8sYv (0) | 2017.08.13 |

| NumPy for Matlab Users (0) | 2017.05.29 |

| Python Numpy Tutorial (0) | 2017.05.29 |

| Videos to learn about AI, Machine Learning & Deep Learning (0) | 2017.05.09 |

| India’s IIS and NIT Develops AI To Identify Protesters With Their Faces Partly Covered With Scarves or Hat (0) | 2017.09.10 |

|---|---|

| 머신러닝/딥러닝 전반의 내용을 담고 있는 ebook (0) | 2017.07.07 |

| PyTorch Tutorial (0) | 2017.05.13 |

| TensorFlow Tutorial #16 Reinforcement Learning (0) | 2017.04.24 |

| How to Use Timesteps in LSTM Networks for Time Series Forecasting - Machine Learning Mastery (0) | 2017.04.18 |

https://kr.mathworks.com/help/images/ref/tformfwd.html

http://web.mit.edu/emeyers/www/warping/warp.html

https://gist.github.com/jack06215/b8af25d366bf4c2307f2

https://kr.mathworks.com/help/images/ref/projective2d-class.html

https://kr.mathworks.com/discovery/affine-transformation.html

https://stackoverflow.com/questions/30437467/how-imwarp-transfer-points-in-matlab

http://www.openu.ac.il/home/hassner/projects/cnn_agegender/

https://github.com/GilLevi/AgeGenderDeepLearning/issues/1

http://www.openu.ac.il/home/hassner/Adience/EidingerEnbarHassner_tifs.pdf

https://kr.mathworks.com/matlabcentral/answers/168461-help-with-imwarp-and-affine2d

https://kr.mathworks.com/help/images/ref/imtransform.html

https://stackoverflow.com/questions/25205635/how-to-register-face-by-landmark-points

https://stackoverflow.com/questions/18925181/procrustes-analysis-with-numpy

https://stackoverflow.com/questions/20242826/matlab-face-alignment-code

http://www.cse.wustl.edu/~taoju/cse554/lectures/lect07_Alignment.pdf

https://kr.mathworks.com/help/images/ref/fitgeotrans.html

https://kr.mathworks.com/help/images/ref/imregtform.html

https://kr.mathworks.com/help/images/ref/imtransform.html

https://cmusatyalab.github.io/openface/

http://elijah.cs.cmu.edu/DOCS/CMU-CS-16-118.pdf

https://kr.mathworks.com/matlabcentral/fileexchange/3025-affine-transformation

https://kr.mathworks.com/matlabcentral/fileexchange/4364-procrustes-problem

https://www.mathworks.com/examples/matlab/community/20690-procrustes-shape-analysis

https://kr.mathworks.com/matlabcentral/newsreader/view_thread/311960http://kr.mathworks.com/help/stats/procrustes.html

https://cmusatyalab.github.io/openface/

http://what-when-how.com/face-recognition/face-alignment-models-face-image-modeling-and-representation-face-recognition-part-1/https://kr.mathworks.com/matlabcentral/answers/287058-face-normalisation-using-affine-transform

https://kr.mathworks.com/matlabcentral/fileexchange/35106-the-phd-face-recognition-toolbox?focused=5217480&tab=function

| keras Sequential, Functional, and Model Subclassing 설명 사이트 (0) | 2021.02.15 |

|---|---|

| skin detection (0) | 2017.03.29 |

| 파이참 설치 및 사용법법 & Jupyter 서버 설치 및 실행법 (0) | 2017.03.27 |

| 20170228_roc curve area under of them (0) | 2017.03.01 |

| 20170212 mssql 테이블 수정 (0) | 2017.02.12 |

https://github.com/GilLevi/AgeGenderDeepLearning/issues/1

Hi,

Thank you for your interest in our work.

Alignment is done by detecting about 68 facial landmark and applying an affine transformation to a predefined set of locations for those 68 landmarks.

I didn't understand what you mean by the size of the face in the image. Can you please elaborate?

Best,

Gil

| Compare Handwritten Shapes Using Procrustes Analysis (0) | 2019.07.02 |

|---|---|

| GrabCut, GraphCut matlab Source code (0) | 2018.06.18 |

| Procrustes shape analysis 설명 잘 돼 있음 (0) | 2017.06.02 |

| Plotting and Intrepretating an ROC Curve (0) | 2017.03.01 |

| awesome-object-proposals (0) | 2017.02.28 |

Alex Townsend, August 2011

(Chebfun Example geom/Procrustes.m)

Procrustes shape analysis is a statistical method for analysing the distribution of sets of shapes (see [1]). Let's suppose we pick up a pebble from the beach and want to know how close its shape matches the outline of an frisbee. Here is a plot of the frisbee and the beach pebble.

Two shapes are equivalent if one can be obtained from the other by translating, scaling and rotating. Before comparison we thus:

1. Translate the shapes so they have mean zero.

2. Scale so the shapes have Root Mean Squared Distance (RMSD) to the origin of one.

3. Rotate to align major axis.

Here is how the frisbee and the pebble compare after each stage.

To calculate the Procrustes distance we would measure the error between the two shapes at a finite number of reference points and compute the vector 2-norm. In this discrete case In Chebfun we calculate the continuous analogue:

In the discrete version of Procrustes shape analysis statisticians choose reference points on the two shapes (to compare). They then work out the difference between corresponding reference points. The error computed depends on this correspondence. A different correspondence gives a different error. In the continuous case this correspondence becomes the parameterisation. A different parameterisation of the two curves gives a different error. This continuous version of Procrustes (as implemented in this example) is therefore more of an 'eye-ball' check than a robust statistical analysis.

At the beach shapes reflect on the surface of the sea. An interesting question is: How close, in shape, is a pebble to its reflection? Here is a plot of a pebble and its reflection.

Here is how the pebble and its reflection compare after each stage of translating, scaling and rotating.

Now we calculate the continuous Procrustes distance.

Comparing this result to the Procrustes distance of the pebble and a frisbee shows that the pebble is closer in shape to a frisbee than its own reflection!

Contents

MATLAB® and !NumPy/!SciPy have a lot in common. But there are many differences. NumPy and SciPy were created to do numerical and scientific computing in the most natural way with Python, not to be MATLAB® clones. This page is intended to be a place to collect wisdom about the differences, mostly for the purpose of helping proficient MATLAB® users become proficient NumPy and SciPy users. NumPyProConPage is another page for curious people who are thinking of adopting Python with NumPy and SciPy instead of MATLAB® and want to see a list of pros and cons.

|

In MATLAB®, the basic data type is a multidimensional array of double precision floating point numbers. Most expressions take such arrays and return such arrays. Operations on the 2-D instances of these arrays are designed to act more or less like matrix operations in linear algebra. |

In NumPy the basic type is a multidimensional array. Operations on these arrays in all dimensionalities including 2D are elementwise operations. However, there is a special matrix type for doing linear algebra, which is just a subclass of the array class. Operations on matrix-class arrays are linear algebra operations. |

|

MATLAB® uses 1 (one) based indexing. The initial element of a sequence is found using a(1). See note 'INDEXING' |

Python uses 0 (zero) based indexing. The initial element of a sequence is found using a[0]. |

|

MATLAB®'s scripting language was created for doing linear algebra. The syntax for basic matrix operations is nice and clean, but the API for adding GUIs and making full-fledged applications is more or less an afterthought. |

NumPy is based on Python, which was designed from the outset to be an excellent general-purpose programming language. While Matlab's syntax for some array manipulations is more compact than NumPy's, NumPy (by virtue of being an add-on to Python) can do many things that Matlab just cannot, for instance subclassing the main array type to do both array and matrix math cleanly. |

|

In MATLAB®, arrays have pass-by-value semantics, with a lazy copy-on-write scheme to prevent actually creating copies until they are actually needed. Slice operations copy parts of the array. |

In NumPy arrays have pass-by-reference semantics. Slice operations are views into an array. |

|

In MATLAB®, every function must be in a file of the same name, and you can't define local functions in an ordinary script file or at the command-prompt (inlines are not real functions but macros, like in C). |

NumPy code is Python code, so it has no such restrictions. You can define functions wherever you like. |

|

MATLAB® has an active community and there is lots of code available for free. But the vitality of the community is limited by MATLAB®'s cost; your MATLAB® programs can be run by only a few. |

!NumPy/!SciPy also has an active community, based right here on this web site! It is smaller, but it is growing very quickly. In contrast, Python programs can be redistributed and used freely. See Topical_Software for a listing of free add-on application software, Mailing_Lists for discussions, and the rest of this web site for additional community contributions. We encourage your participation! |

|

MATLAB® has an extensive set of optional, domain-specific add-ons ('toolboxes') available for purchase, such as for signal processing, optimization, control systems, and the whole SimuLink® system for graphically creating dynamical system models. |

There's no direct equivalent of this in the free software world currently, in terms of range and depth of the add-ons. However the list in Topical_Software certainly shows a growing trend in that direction. |

|

MATLAB® has a sophisticated 2-d and 3-d plotting system, with user interface widgets. |

Addon software can be used with Numpy to make comparable plots to MATLAB®. Matplotlib is a mature 2-d plotting library that emulates the MATLAB® interface. PyQwt allows more robust and faster user interfaces than MATLAB®. And mlab, a "matlab-like" API based on Mayavi2, for 3D plotting of Numpy arrays. See the Topical_Software page for more options, links, and details. There is, however, no definitive, all-in-one, easy-to-use, built-in plotting solution for 2-d and 3-d. This is an area where Numpy/Scipy could use some work. |

|

MATLAB® provides a full development environment with command interaction window, integrated editor, and debugger. |

Numpy does not have one standard IDE. However, the IPython environment provides a sophisticated command prompt with full completion, help, and debugging support, and interfaces with the Matplotlib library for plotting and the Emacs/XEmacs editors. |

|

MATLAB® itself costs thousands of dollars if you're not a student. The source code to the main package is not available to ordinary users. You can neither isolate nor fix bugs and performance issues yourself, nor can you directly influence the direction of future development. (If you are really set on Matlab-like syntax, however, there is Octave, another numerical computing environment that allows the use of most Matlab syntax without modification.) |

NumPy and SciPy are free (both beer and speech), whoever you are. |

Use arrays.

The only disadvantage of using the array type is that you will have to use dot instead of * to multiply (reduce) two tensors (scalar product, matrix vector multiplication etc.).

Numpy contains both an array class and a matrix class. The array class is intended to be a general-purpose n-dimensional array for many kinds of numerical computing, while matrix is intended to facilitate linear algebra computations specifically. In practice there are only a handful of key differences between the two.

Operator *, dot(), and multiply():

For array, '*' means element-wise multiplication, and the dot() function is used for matrix multiplication.

For matrix, '*' means matrix multiplication, and the multiply() function is used for element-wise multiplication.

For array, the vector shapes 1xN, Nx1, and N are all different things. Operations like A[:,1] return a rank-1 array of shape N, not a rank-2 of shape Nx1. Transpose on a rank-1 array does nothing.

For matrix, rank-1 arrays are always upconverted to 1xN or Nx1 matrices (row or column vectors). A[:,1] returns a rank-2 matrix of shape Nx1.

Handling of higher-rank arrays (rank > 2)

array objects can have rank > 2.

matrix objects always have exactly rank 2.

array has a .T attribute, which returns the transpose of the data.

matrix also has .H, .I, and .A attributes, which return the conjugate transpose, inverse, and asarray() of the matrix, respectively.

The array constructor takes (nested) Python sequences as initializers. As in, array([[1,2,3],[4,5,6]]).

The matrix constructor additionally takes a convenient string initializer. As in matrix("[1 2 3; 4 5 6]").

There are pros and cons to using both:

array

![]() You can treat rank-1 arrays as either row or column vectors. dot(A,v) treats v as a column vector, while dot(v,A) treats v as a row vector. This can save you having to type a lot of transposes.

You can treat rank-1 arrays as either row or column vectors. dot(A,v) treats v as a column vector, while dot(v,A) treats v as a row vector. This can save you having to type a lot of transposes.

![]() Having to use the dot() function for matrix-multiply is messy -- dot(dot(A,B),C) vs. A*B*C.

Having to use the dot() function for matrix-multiply is messy -- dot(dot(A,B),C) vs. A*B*C.

![]() Element-wise multiplication is easy: A*B.

Element-wise multiplication is easy: A*B.

![]() array is the "default" NumPy type, so it gets the most testing, and is the type most likely to be returned by 3rd party code that uses NumPy.

array is the "default" NumPy type, so it gets the most testing, and is the type most likely to be returned by 3rd party code that uses NumPy.

![]() Is quite at home handling data of any rank.

Is quite at home handling data of any rank.

![]() Closer in semantics to tensor algebra, if you are familiar with that.

Closer in semantics to tensor algebra, if you are familiar with that.

![]() All operations (*, /, +, ** etc.) are elementwise

All operations (*, /, +, ** etc.) are elementwise

matrix

![]() Behavior is more like that of MATLAB® matrices.

Behavior is more like that of MATLAB® matrices.

![]() Maximum of rank-2. To hold rank-3 data you need array or perhaps a Python list of matrix.

Maximum of rank-2. To hold rank-3 data you need array or perhaps a Python list of matrix.

![]() Minimum of rank-2. You cannot have vectors. They must be cast as single-column or single-row matrices.

Minimum of rank-2. You cannot have vectors. They must be cast as single-column or single-row matrices.

![]() Since array is the default in NumPy, some functions may return an array even if you give them a matrix as an argument. This shouldn't happen with NumPy functions (if it does it's a bug), but 3rd party code based on NumPy may not honor type preservation like NumPy does.

Since array is the default in NumPy, some functions may return an array even if you give them a matrix as an argument. This shouldn't happen with NumPy functions (if it does it's a bug), but 3rd party code based on NumPy may not honor type preservation like NumPy does.

![]() A*B is matrix multiplication, so more convenient for linear algebra.

A*B is matrix multiplication, so more convenient for linear algebra.

![]() Element-wise multiplication requires calling a function, multipy(A,B).

Element-wise multiplication requires calling a function, multipy(A,B).

![]() The use of operator overloading is a bit illogical: * does not work elementwise but / does.

The use of operator overloading is a bit illogical: * does not work elementwise but / does.

The array is thus much more advisable to use, but in the end, you don't really have to choose one or the other. You can mix-and-match. You can use array for the bulk of your code, and switch over to matrix in the sections where you have nitty-gritty linear algebra with lots of matrix-matrix multiplications.

Numpy has some features that facilitate the use of the matrix type, which hopefully make things easier for Matlab converts.

A matlib module has been added that contains matrix versions of common array constructors like ones(), zeros(), empty(), eye(), rand(), repmat(), etc. Normally these functions return arrays, but the matlib versions return matrix objects.

mat has been changed to be a synonym for asmatrix, rather than matrix, thus making it concise way to convert an array to a matrix without copying the data.

Some top-level functions have been removed. For example numpy.rand() now needs to be accessed as numpy.random.rand(). Or use the rand() from the matlib module. But the "numpythonic" way is to use numpy.random.random(), which takes a tuple for the shape, like other numpy functions.

The table below gives rough equivalents for some common MATLAB® expressions. These are not exact equivalents, but rather should be taken as hints to get you going in the right direction. For more detail read the built-in documentation on the NumPy functions.

Some care is necessary when writing functions that take arrays or matrices as arguments --- if you are expecting an array and are given a matrix, or vice versa, then '*' (multiplication) will give you unexpected results. You can convert back and forth between arrays and matrices using

asarray: always returns an object of type array

asmatrix or mat: always return an object of type matrix

asanyarray: always returns an array object or a subclass derived from it, depending on the input. For instance if you pass in a matrix it returns a matrix.

These functions all accept both arrays and matrices (among other things like Python lists), and thus are useful when writing functions that should accept any array-like object.

In the table below, it is assumed that you have executed the following commands in Python:

from numpy import *

import scipy as Sci

import scipy.linalg

Also assume below that if the Notes talk about "matrix" that the arguments are rank 2 entities.

THIS IS AN EVOLVING WIKI DOCUMENT. If you find an error, or can fill in an empty box, please fix it! If there's something you'd like to see added, just add it.

|

MATLAB |

numpy |

Notes | |

|

help func |

info(func) or help(func) or func? (in Ipython) |

get help on the function func | |

|

which func |

find out where func is defined | ||

|

type func |

source(func) or func?? (in Ipython) |

print source for func (if not a native function) | |

|

a && b |

a and b |

short-circuiting logical AND operator (Python native operator); scalar arguments only | |

|

a || b |

a or b |

short-circuiting logical OR operator (Python native operator); scalar arguments only | |

|

1*i,1*j,1i,1j |

1j |

complex numbers | |

|

eps |

spacing(1) |

Distance between 1 and the nearest floating point number | |

|

ode45 |

scipy.integrate.ode(f).set_integrator('dopri5') |

integrate an ODE with Runge-Kutta 4,5 | |

|

ode15s |

scipy.integrate.ode(f).\ |

integrate an ODE with BDF | |

The notation mat(...) means to use the same expression as array, but convert to matrix with the mat() type converter.

The notation asarray(...) means to use the same expression as matrix, but convert to array with the asarray() type converter.

|

MATLAB |

numpy.array |

numpy.matrix |

Notes |

|

ndims(a) |

ndim(a) or a.ndim |

get the number of dimensions of a (tensor rank) | |

|

numel(a) |

size(a) or a.size |

get the number of elements of an array | |

|

size(a) |

shape(a) or a.shape |

get the "size" of the matrix | |

|

size(a,n) |

a.shape[n-1] |

get the number of elements of the nth dimension of array a. (Note that MATLAB® uses 1 based indexing while Python uses 0 based indexing, See note 'INDEXING') | |

|

[ 1 2 3; 4 5 6 ] |

array([[1.,2.,3.], |

mat([[1.,2.,3.], |

2x3 matrix literal |

|

[ a b; c d ] |

vstack([hstack([a,b]), |

bmat('a b; c d') |

construct a matrix from blocks a,b,c, and d |

|

a(end) |

a[-1] |

a[:,-1][0,0] |

access last element in the 1xn matrix a |

|

a(2,5) |

a[1,4] |

access element in second row, fifth column | |

|

a(2,:) |

a[1] or a[1,:] |

entire second row of a | |

|

a(1:5,:) |

a[0:5] or a[:5] or a[0:5,:] |

the first five rows of a | |

|

a(end-4:end,:) |

a[-5:] |

the last five rows of a | |

|

a(1:3,5:9) |

a[0:3][:,4:9] |

rows one to three and columns five to nine of a. This gives read-only access. | |

|

a([2,4,5],[1,3]) |

a[ix_([1,3,4],[0,2])] |

rows 2,4 and 5 and columns 1 and 3. This allows the matrix to be modified, and doesn't require a regular slice. | |

|

a(3:2:21,:) |

a[ 2:21:2,:] |

every other row of a, starting with the third and going to the twenty-first | |

|

a(1:2:end,:) |

a[ ::2,:] |

every other row of a, starting with the first | |

|

a(end:-1:1,:) or flipud(a) |

a[ ::-1,:] |

a with rows in reverse order | |

|

a([1:end 1],:) |

a[r_[:len(a),0]] |

a with copy of the first row appended to the end | |

|

a.' |

a.transpose() or a.T |

transpose of a | |

|

a' |

a.conj().transpose() or a.conj().T |

a.H |

conjugate transpose of a |

|

a * b |

dot(a,b) |

a * b |

matrix multiply |

|

a .* b |

a * b |

multiply(a,b) |

element-wise multiply |

|

a./b |

a/b |

element-wise divide | |

|

a.^3 |

a**3 |

power(a,3) |

element-wise exponentiation |

|

(a>0.5) |

(a>0.5) |

matrix whose i,jth element is (a_ij > 0.5) | |

|

find(a>0.5) |

nonzero(a>0.5) |

find the indices where (a > 0.5) | |

|

a(:,find(v>0.5)) |

a[:,nonzero(v>0.5)[0]] |

a[:,nonzero(v.A>0.5)[0]] |

extract the columms of a where vector v > 0.5 |

|

a(:,find(v>0.5)) |

a[:,v.T>0.5] |

a[:,v.T>0.5)] |

extract the columms of a where column vector v > 0.5 |

|

a(a<0.5)=0 |

a[a<0.5]=0 |

a with elements less than 0.5 zeroed out | |

|

a .* (a>0.5) |

a * (a>0.5) |

mat(a.A * (a>0.5).A) |

a with elements less than 0.5 zeroed out |

|

a(:) = 3 |

a[:] = 3 |

set all values to the same scalar value | |

|

y=x |

y = x.copy() |

numpy assigns by reference | |

|

y=x(2,:) |

y = x[1,:].copy() |

numpy slices are by reference | |

|

y=x(:) |

y = x.flatten(1) |

turn array into vector (note that this forces a copy) | |

|

1:10 |

arange(1.,11.) or |

mat(arange(1.,11.)) or |

create an increasing vector see note 'RANGES' |

|

0:9 |

arange(10.) or |

mat(arange(10.)) or |

create an increasing vector see note 'RANGES' |

|

[1:10]' |

arange(1.,11.)[:, newaxis] |

r_[1.:11.,'c'] |

create a column vector |

|

zeros(3,4) |

zeros((3,4)) |

mat(...) |

3x4 rank-2 array full of 64-bit floating point zeros |

|

zeros(3,4,5) |

zeros((3,4,5)) |

mat(...) |

3x4x5 rank-3 array full of 64-bit floating point zeros |

|

ones(3,4) |

ones((3,4)) |

mat(...) |

3x4 rank-2 array full of 64-bit floating point ones |

|

eye(3) |

eye(3) |

mat(...) |

3x3 identity matrix |

|

diag(a) |

diag(a) |

mat(...) |

vector of diagonal elements of a |

|

diag(a,0) |

diag(a,0) |

mat(...) |

square diagonal matrix whose nonzero values are the elements of a |

|

rand(3,4) |

random.rand(3,4) |

mat(...) |

random 3x4 matrix |

|

linspace(1,3,4) |

linspace(1,3,4) |

mat(...) |

4 equally spaced samples between 1 and 3, inclusive |

|

[x,y]=meshgrid(0:8,0:5) |

mgrid[0:9.,0:6.] or |

mat(...) |

two 2D arrays: one of x values, the other of y values |

|

ogrid[0:9.,0:6.] or |

mat(...) |

the best way to eval functions on a grid | |

|

[x,y]=meshgrid([1,2,4],[2,4,5]) |

meshgrid([1,2,4],[2,4,5]) |

mat(...) |

|

|

ix_([1,2,4],[2,4,5]) |

mat(...) |

the best way to eval functions on a grid | |

|

repmat(a, m, n) |

tile(a, (m, n)) |

mat(...) |

create m by n copies of a |

|

[a b] |

concatenate((a,b),1) or |

concatenate((a,b),1) |

concatenate columns of a and b |

|

[a; b] |

concatenate((a,b)) or |

concatenate((a,b)) |

concatenate rows of a and b |

|

max(max(a)) |

a.max() |

maximum element of a (with ndims(a)<=2 for matlab) | |

|

max(a) |

a.max(0) |

maximum element of each column of matrix a | |

|

max(a,[],2) |

a.max(1) |

maximum element of each row of matrix a | |

|

max(a,b) |

maximum(a, b) |

compares a and b element-wise, and returns the maximum value from each pair | |

|

norm(v) |

sqrt(dot(v,v)) or |

sqrt(dot(v.A,v.A)) or |

L2 norm of vector v |

|

a & b |

logical_and(a,b) |

element-by-element AND operator (Numpy ufunc) see note 'LOGICOPS' | |

|

a | b |

logical_or(a,b) |

element-by-element OR operator (Numpy ufunc) see note 'LOGICOPS' | |

|

bitand(a,b) |

a & b |

bitwise AND operator (Python native and Numpy ufunc) | |

|

bitor(a,b) |

a | b |

bitwise OR operator (Python native and Numpy ufunc) | |

|

inv(a) |

linalg.inv(a) |

inverse of square matrix a | |

|

pinv(a) |

linalg.pinv(a) |

pseudo-inverse of matrix a | |

|

rank(a) |

linalg.matrix_rank(a) |

rank of a matrix a | |

|

a\b |

linalg.solve(a,b) if a is square |

solution of a x = b for x | |

|

b/a |

Solve a.T x.T = b.T instead |

solution of x a = b for x | |

|

[U,S,V]=svd(a) |

U, S, Vh = linalg.svd(a), V = Vh.T |

singular value decomposition of a | |

|

chol(a) |

linalg.cholesky(a).T |

cholesky factorization of a matrix (chol(a) in matlab returns an upper triangular matrix, but linalg.cholesky(a) returns a lower triangular matrix) | |

|

[V,D]=eig(a) |

D,V = linalg.eig(a) |

eigenvalues and eigenvectors of a | |

|

[V,D]=eig(a,b) |

V,D = Sci.linalg.eig(a,b) |

eigenvalues and eigenvectors of a,b | |

|

[V,D]=eigs(a,k) |

find the k largest eigenvalues and eigenvectors of a | ||

|

[Q,R,P]=qr(a,0) |

Q,R = Sci.linalg.qr(a) |

mat(...) |

QR decomposition |

|

[L,U,P]=lu(a) |

L,U = Sci.linalg.lu(a) or |

mat(...) |

LU decomposition (note: P(Matlab) == transpose(P(numpy)) ) |

|

conjgrad |

Sci.linalg.cg |

mat(...) |

Conjugate gradients solver |

|

fft(a) |

fft(a) |

mat(...) |

Fourier transform of a |

|

ifft(a) |

ifft(a) |

mat(...) |

inverse Fourier transform of a |

|

sort(a) |

sort(a) or a.sort() |

mat(...) |

sort the matrix |

|

[b,I] = sortrows(a,i) |

I = argsort(a[:,i]), b=a[I,:] |

sort the rows of the matrix | |

|

regress(y,X) |

linalg.lstsq(X,y) |

multilinear regression | |

|

decimate(x, q) |

Sci.signal.resample(x, len(x)/q) |

downsample with low-pass filtering | |

|

unique(a) |

unique(a) |

||

|

squeeze(a) |

a.squeeze() |

||

Submatrix: Assignment to a submatrix can be done with lists of indexes using the ix_ command. E.g., for 2d array a, one might do: ind=[1,3]; a[np.ix_(ind,ind)]+=100.

HELP: There is no direct equivalent of MATLAB's which command, but the commands help and source will usually list the filename where the function is located. Python also has an inspect module (do import inspect) which provides a getfile that often works.

INDEXING: MATLAB® uses one based indexing, so the initial element of a sequence has index 1. Python uses zero based indexing, so the initial element of a sequence has index 0. Confusion and flamewars arise because each has advantages and disadvantages. One based indexing is consistent with common human language usage, where the "first" element of a sequence has index 1. Zero based indexing simplifies indexing. See also a text by prof.dr. Edsger W. Dijkstra.

RANGES: In MATLAB®, 0:5 can be used as both a range literal and a 'slice' index (inside parentheses); however, in Python, constructs like 0:5 can only be used as a slice index (inside square brackets). Thus the somewhat quirky r_ object was created to allow numpy to have a similarly terse range construction mechanism. Note that r_ is not called like a function or a constructor, but rather indexed using square brackets, which allows the use of Python's slice syntax in the arguments.

LOGICOPS: & or | in Numpy is bitwise AND/OR, while in Matlab & and | are logical AND/OR. The difference should be clear to anyone with significant programming experience. The two can appear to work the same, but there are important differences. If you would have used Matlab's & or | operators, you should use the Numpy ufuncs logical_and/logical_or. The notable differences between Matlab's and Numpy's & and | operators are:

Non-logical {0,1} inputs: Numpy's output is the bitwise AND of the inputs. Matlab treats any non-zero value as 1 and returns the logical AND. For example (3 & 4) in Numpy is 0, while in Matlab both 3 and 4 are considered logical true and (3 & 4) returns 1.

Precedence: Numpy's & operator is higher precedence than logical operators like < and >; Matlab's is the reverse.

If you know you have boolean arguments, you can get away with using Numpy's bitwise operators, but be careful with parentheses, like this: z = (x > 1) & (x < 2). The absence of Numpy operator forms of logical_and and logical_or is an unfortunate consequence of Python's design.

RESHAPE and LINEAR INDEXING: Matlab always allows multi-dimensional arrays to be accessed using scalar or linear indices, Numpy does not. Linear indices are common in Matlab programs, e.g. find() on a matrix returns them, whereas Numpy's find behaves differently. When converting Matlab code it might be necessary to first reshape a matrix to a linear sequence, perform some indexing operations and then reshape back. As reshape (usually) produces views onto the same storage, it should be possible to do this fairly efficiently. Note that the scan order used by reshape in Numpy defaults to the 'C' order, whereas Matlab uses the Fortran order. If you are simply converting to a linear sequence and back this doesn't matter. But if you are converting reshapes from Matlab code which relies on the scan order, then this Matlab code: z = reshape(x,3,4); should become z = x.reshape(3,4,order='F').copy() in Numpy.

In MATLAB® the main tool available to you for customizing the environment is to modify the search path with the locations of your favorite functions. You can put such customizations into a startup script that MATLAB will run on startup.

NumPy, or rather Python, has similar facilities.

To modify your Python search path to include the locations of your own modules, define the PYTHONPATH environment variable.

To have a particular script file executed when the interactive Python interpreter is started, define the PYTHONSTARTUP environment variable to contain the name of your startup script.

Unlike MATLAB®, where anything on your path can be called immediately, with Python you need to first do an 'import' statement to make functions in a particular file accessible.

For example you might make a startup script that looks like this (Note: this is just an example, not a statement of "best practices"):

# Make all numpy available via shorter 'num' prefix

import numpy as num

# Make all matlib functions accessible at the top level via M.func()

import numpy.matlib as M

# Make some matlib functions accessible directly at the top level via, e.g. rand(3,3)

from numpy.matlib import rand,zeros,ones,empty,eye

# Define a Hermitian function

def hermitian(A, **kwargs):

return num.transpose(A,**kwargs).conj()

# Make some shorcuts for transpose,hermitian:

# num.transpose(A) --> T(A)

# hermitian(A) --> H(A)

T = num.transpose

H = hermitian

Plotting: matplotlib provides a workalike interface for 2D plotting; Mayavi provides 3D plotting

Symbolic calculation: swiginac appears to be the most complete current option. sympy is a project aiming at bringing the basic symbolic calculus functionalities to Python. Also to be noted is PyDSTool which provides some basic symbolic functionality.

Linear algebra: scipy.linalg provides the LAPACK routines

Interpolation: [/ScipyPackages/Interpolate scipy.interpolate] provides several spline interpolation tools

Numerical integration: scipy.integrate provides several tools for integrating functions as well as some basic ODE integrators. Convert XML vector field specifications automatically using VFGEN.

Dynamical systems: PyDSTool provides a large dynamical systems and modeling package, including good ODE/DAE integrators. Convert XML vector field specifications automatically using VFGEN.

Simulink: no alternative is currently available.

See http://mathesaurus.sf.net/ for another MATLAB®/NumPy cross-reference.

See http://urapiv.wordpress.com for an open-source project (URAPIV) that attempts to move from MATLAB® to Python (PyPIV http://sourceforge.net/projects/pypiv) with SciPy / NumPy.

In order to create a programming environment similar to the one presented by MATLAB®, the following are useful:

IPython: an interactive environment with many features geared towards efficient work in typical scientific usage very similar (with some enhancments) to MATLAB® console.

Matplotlib: a 2D ploting package with a list of commands similar to the ones found in matlab. Matplotlib is very well integrated with IPython.

Spyder a free and open-source Python development environment providing a MATLAB®-like interface and experience

SPE is a good free IDE for python. Has an interactive prompt.

Eclipse: is one nice option for python code editing via the pydev plugin.

Wing IDE: a commercial IDE available for multiple platforms. The professional version has an interactive debugging prompt similar to MATLAB's.

Python(x,y) scientific and engineering development software for numerical computations, data analysis and data visualization. The installation includes, among others, Spyder, Eclipse and a lot of relevant Python modules for scientific computing.

Python Tools for Visual Studio: a rich IDE plugin for Visual Studio that supports CPython, IronPython, the IPython REPL, Debugging, Profiling, including running debugging MPI program on HPC clusters.

An extensive list of tools for scientific work with python is in the link: Topical_Software.

MATLAB® and SimuLink® are registered trademarks of The MathWorks.

CategoryCookbook CategoryTemplate CategoryTemplate CategoryCookbook CategorySciPyPackages CategoryCategory

SciPy: NumPy_for_Matlab_Users (last edited 2015-10-24 17:48:26 by anonymous)

| Deep Learning Lecture Collection (Spring 2017): http://www.youtube.com/playlist?list=PL3FW7Lu3i5JvHM8ljYj-zLfQRF3EO8sYv (0) | 2017.08.13 |

|---|---|

| Learn Python in 3 days : Step by Step Guide - Data Science Central (0) | 2017.06.11 |

| Python Numpy Tutorial (0) | 2017.05.29 |

| Videos to learn about AI, Machine Learning & Deep Learning (0) | 2017.05.09 |

| 초짜 대학원생의 입장에서 이해하는 GAN 시리즈 (0) | 2017.04.02 |

This tutorial was contributed by Justin Johnson.

We will use the Python programming language for all assignments in this course. Python is a great general-purpose programming language on its own, but with the help of a few popular libraries (numpy, scipy, matplotlib) it becomes a powerful environment for scientific computing.

We expect that many of you will have some experience with Python and numpy; for the rest of you, this section will serve as a quick crash course both on the Python programming language and on the use of Python for scientific computing.

Some of you may have previous knowledge in Matlab, in which case we also recommend the numpy for Matlab users page.

You can also find an IPython notebook version of this tutorial here created by Volodymyr Kuleshov and Isaac Caswell for CS 228.

Table of contents:

Python is a high-level, dynamically typed multiparadigm programming language. Python code is often said to be almost like pseudocode, since it allows you to express very powerful ideas in very few lines of code while being very readable. As an example, here is an implementation of the classic quicksort algorithm in Python:

def quicksort(arr):

if len(arr) <= 1:

return arr

pivot = arr[len(arr) / 2]

left = [x for x in arr if x < pivot]

middle = [x for x in arr if x == pivot]

right = [x for x in arr if x > pivot]

return quicksort(left) + middle + quicksort(right)

print quicksort([3,6,8,10,1,2,1])

# Prints "[1, 1, 2, 3, 6, 8, 10]"

There are currently two different supported versions of Python, 2.7 and 3.4. Somewhat confusingly, Python 3.0 introduced many backwards-incompatible changes to the language, so code written for 2.7 may not work under 3.4 and vice versa. For this class all code will use Python 2.7.

You can check your Python version at the command line by running python --version.

Like most languages, Python has a number of basic types including integers, floats, booleans, and strings. These data types behave in ways that are familiar from other programming languages.

Numbers: Integers and floats work as you would expect from other languages:

x = 3

print type(x) # Prints "<type 'int'>"

print x # Prints "3"

print x + 1 # Addition; prints "4"

print x - 1 # Subtraction; prints "2"

print x * 2 # Multiplication; prints "6"

print x ** 2 # Exponentiation; prints "9"

x += 1

print x # Prints "4"

x *= 2

print x # Prints "8"

y = 2.5

print type(y) # Prints "<type 'float'>"

print y, y + 1, y * 2, y ** 2 # Prints "2.5 3.5 5.0 6.25"

Note that unlike many languages, Python does not have unary increment (x++) or decrement (x--) operators.

Python also has built-in types for long integers and complex numbers; you can find all of the details in the documentation.

Booleans: Python implements all of the usual operators for Boolean logic, but uses English words rather than symbols (&&, ||, etc.):

t = True

f = False

print type(t) # Prints "<type 'bool'>"

print t and f # Logical AND; prints "False"

print t or f # Logical OR; prints "True"

print not t # Logical NOT; prints "False"

print t != f # Logical XOR; prints "True"

Strings: Python has great support for strings:

hello = 'hello' # String literals can use single quotes

world = "world" # or double quotes; it does not matter.

print hello # Prints "hello"

print len(hello) # String length; prints "5"

hw = hello + ' ' + world # String concatenation

print hw # prints "hello world"

hw12 = '%s %s %d' % (hello, world, 12) # sprintf style string formatting

print hw12 # prints "hello world 12"

String objects have a bunch of useful methods; for example:

s = "hello"

print s.capitalize() # Capitalize a string; prints "Hello"

print s.upper() # Convert a string to uppercase; prints "HELLO"

print s.rjust(7) # Right-justify a string, padding with spaces; prints " hello"

print s.center(7) # Center a string, padding with spaces; prints " hello "

print s.replace('l', '(ell)') # Replace all instances of one substring with another;

# prints "he(ell)(ell)o"

print ' world '.strip() # Strip leading and trailing whitespace; prints "world"

You can find a list of all string methods in the documentation.

Python includes several built-in container types: lists, dictionaries, sets, and tuples.

A list is the Python equivalent of an array, but is resizeable and can contain elements of different types:

xs = [3, 1, 2] # Create a list

print xs, xs[2] # Prints "[3, 1, 2] 2"

print xs[-1] # Negative indices count from the end of the list; prints "2"

xs[2] = 'foo' # Lists can contain elements of different types

print xs # Prints "[3, 1, 'foo']"

xs.append('bar') # Add a new element to the end of the list

print xs # Prints "[3, 1, 'foo', 'bar']"

x = xs.pop() # Remove and return the last element of the list

print x, xs # Prints "bar [3, 1, 'foo']"

As usual, you can find all the gory details about lists in the documentation.

Slicing: In addition to accessing list elements one at a time, Python provides concise syntax to access sublists; this is known as slicing:

nums = range(5) # range is a built-in function that creates a list of integers

print nums # Prints "[0, 1, 2, 3, 4]"

print nums[2:4] # Get a slice from index 2 to 4 (exclusive); prints "[2, 3]"

print nums[2:] # Get a slice from index 2 to the end; prints "[2, 3, 4]"

print nums[:2] # Get a slice from the start to index 2 (exclusive); prints "[0, 1]"

print nums[:] # Get a slice of the whole list; prints "[0, 1, 2, 3, 4]"

print nums[:-1] # Slice indices can be negative; prints "[0, 1, 2, 3]"

nums[2:4] = [8, 9] # Assign a new sublist to a slice

print nums # Prints "[0, 1, 8, 9, 4]"

We will see slicing again in the context of numpy arrays.

Loops: You can loop over the elements of a list like this:

animals = ['cat', 'dog', 'monkey']

for animal in animals:

print animal

# Prints "cat", "dog", "monkey", each on its own line.

If you want access to the index of each element within the body of a loop, use the built-in enumerate function:

animals = ['cat', 'dog', 'monkey']

for idx, animal in enumerate(animals):

print '#%d: %s' % (idx + 1, animal)

# Prints "#1: cat", "#2: dog", "#3: monkey", each on its own line

List comprehensions: When programming, frequently we want to transform one type of data into another. As a simple example, consider the following code that computes square numbers:

nums = [0, 1, 2, 3, 4]

squares = []

for x in nums:

squares.append(x ** 2)

print squares # Prints [0, 1, 4, 9, 16]

You can make this code simpler using a list comprehension:

nums = [0, 1, 2, 3, 4]

squares = [x ** 2 for x in nums]

print squares # Prints [0, 1, 4, 9, 16]

List comprehensions can also contain conditions:

nums = [0, 1, 2, 3, 4]

even_squares = [x ** 2 for x in nums if x % 2 == 0]

print even_squares # Prints "[0, 4, 16]"

A dictionary stores (key, value) pairs, similar to a Map in Java or an object in Javascript. You can use it like this:

d = {'cat': 'cute', 'dog': 'furry'} # Create a new dictionary with some data

print d['cat'] # Get an entry from a dictionary; prints "cute"

print 'cat' in d # Check if a dictionary has a given key; prints "True"

d['fish'] = 'wet' # Set an entry in a dictionary

print d['fish'] # Prints "wet"

# print d['monkey'] # KeyError: 'monkey' not a key of d

print d.get('monkey', 'N/A') # Get an element with a default; prints "N/A"

print d.get('fish', 'N/A') # Get an element with a default; prints "wet"

del d['fish'] # Remove an element from a dictionary

print d.get('fish', 'N/A') # "fish" is no longer a key; prints "N/A"

You can find all you need to know about dictionaries in the documentation.

Loops: It is easy to iterate over the keys in a dictionary:

d = {'person': 2, 'cat': 4, 'spider': 8}

for animal in d:

legs = d[animal]

print 'A %s has %d legs' % (animal, legs)

# Prints "A person has 2 legs", "A cat has 4 legs", "A spider has 8 legs"

If you want access to keys and their corresponding values, use the iteritems method:

d = {'person': 2, 'cat': 4, 'spider': 8}

for animal, legs in d.iteritems():

print 'A %s has %d legs' % (animal, legs)

# Prints "A person has 2 legs", "A cat has 4 legs", "A spider has 8 legs"

Dictionary comprehensions: These are similar to list comprehensions, but allow you to easily construct dictionaries. For example:

nums = [0, 1, 2, 3, 4]

even_num_to_square = {x: x ** 2 for x in nums if x % 2 == 0}

print even_num_to_square # Prints "{0: 0, 2: 4, 4: 16}"

A set is an unordered collection of distinct elements. As a simple example, consider the following:

animals = {'cat', 'dog'}

print 'cat' in animals # Check if an element is in a set; prints "True"

print 'fish' in animals # prints "False"

animals.add('fish') # Add an element to a set

print 'fish' in animals # Prints "True"

print len(animals) # Number of elements in a set; prints "3"

animals.add('cat') # Adding an element that is already in the set does nothing

print len(animals) # Prints "3"

animals.remove('cat') # Remove an element from a set

print len(animals) # Prints "2"

As usual, everything you want to know about sets can be found in the documentation.

Loops: Iterating over a set has the same syntax as iterating over a list; however since sets are unordered, you cannot make assumptions about the order in which you visit the elements of the set:

animals = {'cat', 'dog', 'fish'}

for idx, animal in enumerate(animals):

print '#%d: %s' % (idx + 1, animal)

# Prints "#1: fish", "#2: dog", "#3: cat"

Set comprehensions: Like lists and dictionaries, we can easily construct sets using set comprehensions:

from math import sqrt

nums = {int(sqrt(x)) for x in range(30)}

print nums # Prints "set([0, 1, 2, 3, 4, 5])"

A tuple is an (immutable) ordered list of values. A tuple is in many ways similar to a list; one of the most important differences is that tuples can be used as keys in dictionaries and as elements of sets, while lists cannot. Here is a trivial example:

d = {(x, x + 1): x for x in range(10)} # Create a dictionary with tuple keys

t = (5, 6) # Create a tuple

print type(t) # Prints "<type 'tuple'>"

print d[t] # Prints "5"

print d[(1, 2)] # Prints "1"

The documentation has more information about tuples.

Python functions are defined using the def keyword. For example:

def sign(x):

if x > 0:

return 'positive'

elif x < 0:

return 'negative'

else:

return 'zero'

for x in [-1, 0, 1]:

print sign(x)

# Prints "negative", "zero", "positive"

We will often define functions to take optional keyword arguments, like this:

def hello(name, loud=False):

if loud:

print 'HELLO, %s!' % name.upper()

else:

print 'Hello, %s' % name

hello('Bob') # Prints "Hello, Bob"

hello('Fred', loud=True) # Prints "HELLO, FRED!"

There is a lot more information about Python functions in the documentation.

The syntax for defining classes in Python is straightforward:

class Greeter(object):

# Constructor

def __init__(self, name):

self.name = name # Create an instance variable

# Instance method

def greet(self, loud=False):

if loud:

print 'HELLO, %s!' % self.name.upper()

else:

print 'Hello, %s' % self.name

g = Greeter('Fred') # Construct an instance of the Greeter class

g.greet() # Call an instance method; prints "Hello, Fred"

g.greet(loud=True) # Call an instance method; prints "HELLO, FRED!"

You can read a lot more about Python classes in the documentation.

Numpy is the core library for scientific computing in Python. It provides a high-performance multidimensional array object, and tools for working with these arrays. If you are already familiar with MATLAB, you might find this tutorial useful to get started with Numpy.

A numpy array is a grid of values, all of the same type, and is indexed by a tuple of nonnegative integers. The number of dimensions is the rank of the array; the shape of an array is a tuple of integers giving the size of the array along each dimension.

We can initialize numpy arrays from nested Python lists, and access elements using square brackets:

import numpy as np

a = np.array([1, 2, 3]) # Create a rank 1 array

print type(a) # Prints "<type 'numpy.ndarray'>"

print a.shape # Prints "(3,)"

print a[0], a[1], a[2] # Prints "1 2 3"

a[0] = 5 # Change an element of the array

print a # Prints "[5, 2, 3]"

b = np.array([[1,2,3],[4,5,6]]) # Create a rank 2 array

print b.shape # Prints "(2, 3)"

print b[0, 0], b[0, 1], b[1, 0] # Prints "1 2 4"

Numpy also provides many functions to create arrays:

import numpy as np

a = np.zeros((2,2)) # Create an array of all zeros

print a # Prints "[[ 0. 0.]

# [ 0. 0.]]"

b = np.ones((1,2)) # Create an array of all ones

print b # Prints "[[ 1. 1.]]"

c = np.full((2,2), 7) # Create a constant array

print c # Prints "[[ 7. 7.]

# [ 7. 7.]]"

d = np.eye(2) # Create a 2x2 identity matrix

print d # Prints "[[ 1. 0.]

# [ 0. 1.]]"

e = np.random.random((2,2)) # Create an array filled with random values

print e # Might print "[[ 0.91940167 0.08143941]

# [ 0.68744134 0.87236687]]"

You can read about other methods of array creation in the documentation.

Numpy offers several ways to index into arrays.

Slicing: Similar to Python lists, numpy arrays can be sliced. Since arrays may be multidimensional, you must specify a slice for each dimension of the array:

import numpy as np

# Create the following rank 2 array with shape (3, 4)

# [[ 1 2 3 4]

# [ 5 6 7 8]

# [ 9 10 11 12]]

a = np.array([[1,2,3,4], [5,6,7,8], [9,10,11,12]])

# Use slicing to pull out the subarray consisting of the first 2 rows

# and columns 1 and 2; b is the following array of shape (2, 2):

# [[2 3]

# [6 7]]

b = a[:2, 1:3]

# A slice of an array is a view into the same data, so modifying it

# will modify the original array.

print a[0, 1] # Prints "2"

b[0, 0] = 77 # b[0, 0] is the same piece of data as a[0, 1]

print a[0, 1] # Prints "77"

You can also mix integer indexing with slice indexing. However, doing so will yield an array of lower rank than the original array. Note that this is quite different from the way that MATLAB handles array slicing:

import numpy as np

# Create the following rank 2 array with shape (3, 4)

# [[ 1 2 3 4]

# [ 5 6 7 8]

# [ 9 10 11 12]]

a = np.array([[1,2,3,4], [5,6,7,8], [9,10,11,12]])

# Two ways of accessing the data in the middle row of the array.

# Mixing integer indexing with slices yields an array of lower rank,

# while using only slices yields an array of the same rank as the

# original array:

row_r1 = a[1, :] # Rank 1 view of the second row of a

row_r2 = a[1:2, :] # Rank 2 view of the second row of a

print row_r1, row_r1.shape # Prints "[5 6 7 8] (4,)"

print row_r2, row_r2.shape # Prints "[[5 6 7 8]] (1, 4)"

# We can make the same distinction when accessing columns of an array:

col_r1 = a[:, 1]

col_r2 = a[:, 1:2]

print col_r1, col_r1.shape # Prints "[ 2 6 10] (3,)"

print col_r2, col_r2.shape # Prints "[[ 2]

# [ 6]

# [10]] (3, 1)"

Integer array indexing: When you index into numpy arrays using slicing, the resulting array view will always be a subarray of the original array. In contrast, integer array indexing allows you to construct arbitrary arrays using the data from another array. Here is an example:

import numpy as np

a = np.array([[1,2], [3, 4], [5, 6]])

# An example of integer array indexing.

# The returned array will have shape (3,) and

print a[[0, 1, 2], [0, 1, 0]] # Prints "[1 4 5]"

# The above example of integer array indexing is equivalent to this:

print np.array([a[0, 0], a[1, 1], a[2, 0]]) # Prints "[1 4 5]"

# When using integer array indexing, you can reuse the same

# element from the source array:

print a[[0, 0], [1, 1]] # Prints "[2 2]"

# Equivalent to the previous integer array indexing example

print np.array([a[0, 1], a[0, 1]]) # Prints "[2 2]"

One useful trick with integer array indexing is selecting or mutating one element from each row of a matrix:

import numpy as np

# Create a new array from which we will select elements

a = np.array([[1,2,3], [4,5,6], [7,8,9], [10, 11, 12]])

print a # prints "array([[ 1, 2, 3],

# [ 4, 5, 6],

# [ 7, 8, 9],

# [10, 11, 12]])"

# Create an array of indices

b = np.array([0, 2, 0, 1])

# Select one element from each row of a using the indices in b

print a[np.arange(4), b] # Prints "[ 1 6 7 11]"

# Mutate one element from each row of a using the indices in b

a[np.arange(4), b] += 10

print a # prints "array([[11, 2, 3],

# [ 4, 5, 16],

# [17, 8, 9],

# [10, 21, 12]])

Boolean array indexing: Boolean array indexing lets you pick out arbitrary elements of an array. Frequently this type of indexing is used to select the elements of an array that satisfy some condition. Here is an example:

import numpy as np

a = np.array([[1,2], [3, 4], [5, 6]])

bool_idx = (a > 2) # Find the elements of a that are bigger than 2;

# this returns a numpy array of Booleans of the same

# shape as a, where each slot of bool_idx tells

# whether that element of a is > 2.

print bool_idx # Prints "[[False False]

# [ True True]

# [ True True]]"

# We use boolean array indexing to construct a rank 1 array

# consisting of the elements of a corresponding to the True values

# of bool_idx

print a[bool_idx] # Prints "[3 4 5 6]"

# We can do all of the above in a single concise statement:

print a[a > 2] # Prints "[3 4 5 6]"

For brevity we have left out a lot of details about numpy array indexing; if you want to know more you should read the documentation.

Every numpy array is a grid of elements of the same type. Numpy provides a large set of numeric datatypes that you can use to construct arrays. Numpy tries to guess a datatype when you create an array, but functions that construct arrays usually also include an optional argument to explicitly specify the datatype. Here is an example:

import numpy as np

x = np.array([1, 2]) # Let numpy choose the datatype

print x.dtype # Prints "int64"

x = np.array([1.0, 2.0]) # Let numpy choose the datatype

print x.dtype # Prints "float64"

x = np.array([1, 2], dtype=np.int64) # Force a particular datatype

print x.dtype # Prints "int64"

You can read all about numpy datatypes in the documentation.

Basic mathematical functions operate elementwise on arrays, and are available both as operator overloads and as functions in the numpy module:

import numpy as np

x = np.array([[1,2],[3,4]], dtype=np.float64)

y = np.array([[5,6],[7,8]], dtype=np.float64)

# Elementwise sum; both produce the array

# [[ 6.0 8.0]

# [10.0 12.0]]

print x + y

print np.add(x, y)

# Elementwise difference; both produce the array

# [[-4.0 -4.0]

# [-4.0 -4.0]]

print x - y

print np.subtract(x, y)

# Elementwise product; both produce the array

# [[ 5.0 12.0]

# [21.0 32.0]]

print x * y

print np.multiply(x, y)

# Elementwise division; both produce the array

# [[ 0.2 0.33333333]

# [ 0.42857143 0.5 ]]

print x / y

print np.divide(x, y)

# Elementwise square root; produces the array

# [[ 1. 1.41421356]

# [ 1.73205081 2. ]]

print np.sqrt(x)

Note that unlike MATLAB, * is elementwise multiplication, not matrix multiplication. We instead use the dot function to compute inner products of vectors, to multiply a vector by a matrix, and to multiply matrices. dot is available both as a function in the numpy module and as an instance method of array objects:

import numpy as np

x = np.array([[1,2],[3,4]])

y = np.array([[5,6],[7,8]])

v = np.array([9,10])

w = np.array([11, 12])

# Inner product of vectors; both produce 219

print v.dot(w)

print np.dot(v, w)

# Matrix / vector product; both produce the rank 1 array [29 67]

print x.dot(v)

print np.dot(x, v)

# Matrix / matrix product; both produce the rank 2 array

# [[19 22]

# [43 50]]

print x.dot(y)

print np.dot(x, y)

Numpy provides many useful functions for performing computations on arrays; one of the most useful is sum:

import numpy as np

x = np.array([[1,2],[3,4]])

print np.sum(x) # Compute sum of all elements; prints "10"

print np.sum(x, axis=0) # Compute sum of each column; prints "[4 6]"

print np.sum(x, axis=1) # Compute sum of each row; prints "[3 7]"

You can find the full list of mathematical functions provided by numpy in the documentation.

Apart from computing mathematical functions using arrays, we frequently need to reshape or otherwise manipulate data in arrays. The simplest example of this type of operation is transposing a matrix; to transpose a matrix, simply use the T attribute of an array object:

import numpy as np

x = np.array([[1,2], [3,4]])

print x # Prints "[[1 2]

# [3 4]]"

print x.T # Prints "[[1 3]

# [2 4]]"

# Note that taking the transpose of a rank 1 array does nothing:

v = np.array([1,2,3])

print v # Prints "[1 2 3]"

print v.T # Prints "[1 2 3]"

Numpy provides many more functions for manipulating arrays; you can see the full list in the documentation.

Broadcasting is a powerful mechanism that allows numpy to work with arrays of different shapes when performing arithmetic operations. Frequently we have a smaller array and a larger array, and we want to use the smaller array multiple times to perform some operation on the larger array.

For example, suppose that we want to add a constant vector to each row of a matrix. We could do it like this:

import numpy as np

# We will add the vector v to each row of the matrix x,

# storing the result in the matrix y

x = np.array([[1,2,3], [4,5,6], [7,8,9], [10, 11, 12]])

v = np.array([1, 0, 1])

y = np.empty_like(x) # Create an empty matrix with the same shape as x

# Add the vector v to each row of the matrix x with an explicit loop

for i in range(4):

y[i, :] = x[i, :] + v

# Now y is the following

# [[ 2 2 4]

# [ 5 5 7]

# [ 8 8 10]

# [11 11 13]]

print y

This works; however when the matrix x is very large, computing an explicit loop in Python could be slow. Note that adding the vector v to each row of the matrix x is equivalent to forming a matrix vv by stacking multiple copies of v vertically, then performing elementwise summation of x and vv. We could implement this approach like this:

import numpy as np

# We will add the vector v to each row of the matrix x,

# storing the result in the matrix y

x = np.array([[1,2,3], [4,5,6], [7,8,9], [10, 11, 12]])

v = np.array([1, 0, 1])

vv = np.tile(v, (4, 1)) # Stack 4 copies of v on top of each other

print vv # Prints "[[1 0 1]

# [1 0 1]

# [1 0 1]

# [1 0 1]]"

y = x + vv # Add x and vv elementwise

print y # Prints "[[ 2 2 4

# [ 5 5 7]

# [ 8 8 10]

# [11 11 13]]"

Numpy broadcasting allows us to perform this computation without actually creating multiple copies of v. Consider this version, using broadcasting:

import numpy as np

# We will add the vector v to each row of the matrix x,

# storing the result in the matrix y

x = np.array([[1,2,3], [4,5,6], [7,8,9], [10, 11, 12]])

v = np.array([1, 0, 1])

y = x + v # Add v to each row of x using broadcasting

print y # Prints "[[ 2 2 4]

# [ 5 5 7]

# [ 8 8 10]

# [11 11 13]]"

The line y = x + v works even though x has shape (4, 3) and v has shape (3,) due to broadcasting; this line works as if v actually had shape (4, 3), where each row was a copy of v, and the sum was performed elementwise.

Broadcasting two arrays together follows these rules:

If this explanation does not make sense, try reading the explanation from the documentation or this explanation.

Functions that support broadcasting are known as universal functions. You can find the list of all universal functions in the documentation.

Here are some applications of broadcasting:

import numpy as np

# Compute outer product of vectors

v = np.array([1,2,3]) # v has shape (3,)

w = np.array([4,5]) # w has shape (2,)

# To compute an outer product, we first reshape v to be a column

# vector of shape (3, 1); we can then broadcast it against w to yield

# an output of shape (3, 2), which is the outer product of v and w:

# [[ 4 5]

# [ 8 10]

# [12 15]]

print np.reshape(v, (3, 1)) * w

# Add a vector to each row of a matrix

x = np.array([[1,2,3], [4,5,6]])

# x has shape (2, 3) and v has shape (3,) so they broadcast to (2, 3),

# giving the following matrix:

# [[2 4 6]

# [5 7 9]]

print x + v

# Add a vector to each column of a matrix

# x has shape (2, 3) and w has shape (2,).

# If we transpose x then it has shape (3, 2) and can be broadcast

# against w to yield a result of shape (3, 2); transposing this result

# yields the final result of shape (2, 3) which is the matrix x with

# the vector w added to each column. Gives the following matrix:

# [[ 5 6 7]

# [ 9 10 11]]

print (x.T + w).T

# Another solution is to reshape w to be a column vector of shape (2, 1);

# we can then broadcast it directly against x to produce the same

# output.

print x + np.reshape(w, (2, 1))

# Multiply a matrix by a constant:

# x has shape (2, 3). Numpy treats scalars as arrays of shape ();

# these can be broadcast together to shape (2, 3), producing the

# following array:

# [[ 2 4 6]

# [ 8 10 12]]

print x * 2

Broadcasting typically makes your code more concise and faster, so you should strive to use it where possible.

This brief overview has touched on many of the important things that you need to know about numpy, but is far from complete. Check out the numpy reference to find out much more about numpy.

Numpy provides a high-performance multidimensional array and basic tools to compute with and manipulate these arrays. SciPy builds on this, and provides a large number of functions that operate on numpy arrays and are useful for different types of scientific and engineering applications.

The best way to get familiar with SciPy is to browse the documentation. We will highlight some parts of SciPy that you might find useful for this class.

SciPy provides some basic functions to work with images. For example, it has functions to read images from disk into numpy arrays, to write numpy arrays to disk as images, and to resize images. Here is a simple example that showcases these functions:

from scipy.misc import imread, imsave, imresize

# Read an JPEG image into a numpy array

img = imread('assets/cat.jpg')

print img.dtype, img.shape # Prints "uint8 (400, 248, 3)"

# We can tint the image by scaling each of the color channels

# by a different scalar constant. The image has shape (400, 248, 3);

# we multiply it by the array [1, 0.95, 0.9] of shape (3,);